Researchers have developed a deep reinforcement learning framework intended to harden smart power grids against cyber-physical threats. Published in IEEE Access, the research, led by Boyu Wang from the School of Intelligence Science and Technology at Beijing University of Civil Engineering and Architecture, introduces an adaptive control architecture designed to enhance the stability and security of modern power systems.

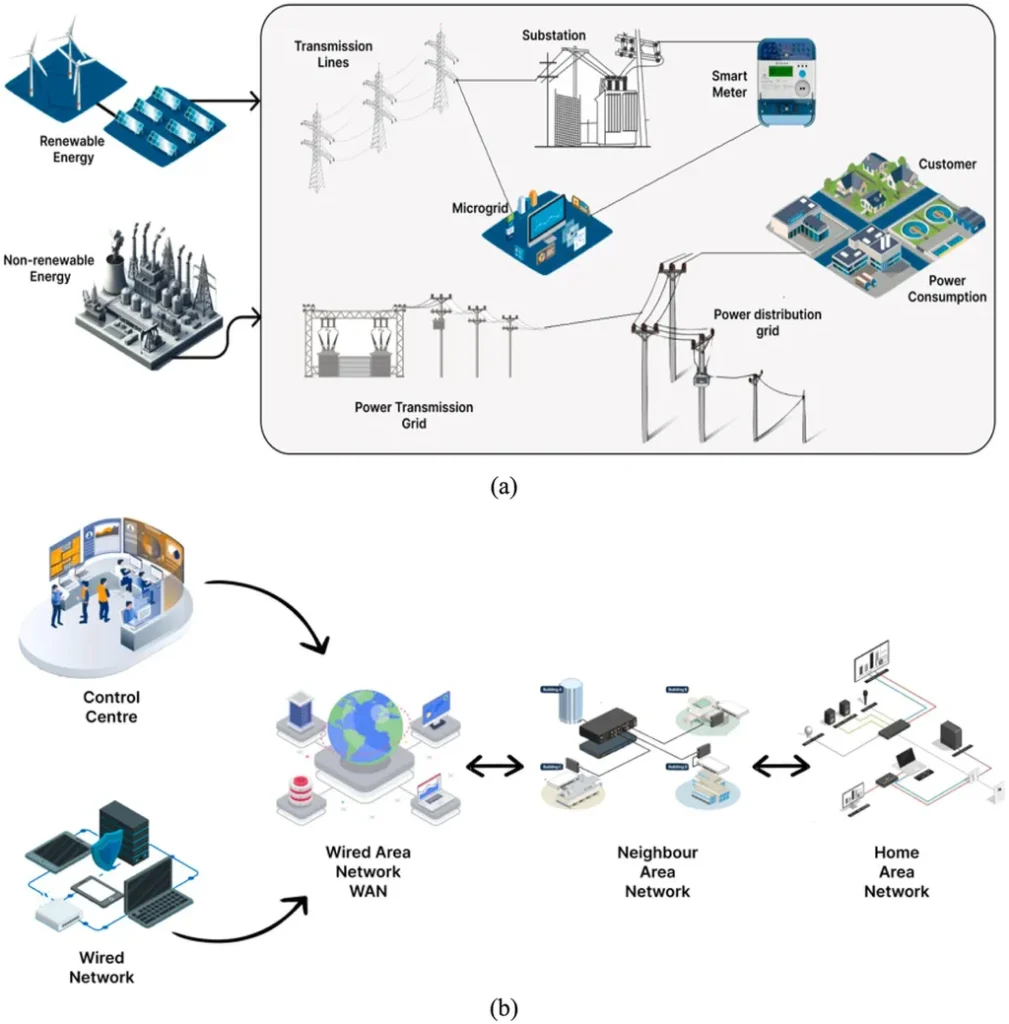

The study addresses a pressing challenge in the energy sector: the vulnerability of smart grids to cyber-attacks, system faults, and dynamic load variations. Traditional control methods, such as Proportional-Integral-Derivative (PID) controllers, often fall short in adapting to the evolving nature of these threats. “Our framework leverages the power of deep reinforcement learning to provide a robust and adaptive solution,” Wang explains. “By integrating the Deep Deterministic Policy Gradient (DDPG) algorithm with the Root Mean Square Propagation (RMSprop) optimizer, we’ve created a system capable of real-time, continuous control of distributed energy resources, microgrids, and control centers.”
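To make the pairing of DDPG with RMSprop more concrete, here is a minimal sketch of the two generic update rules involved: the RMSprop parameter step and the soft target-network update commonly used in DDPG. It is not the authors' implementation. The function names, the list-of-floats weight representation, and all hyperparameter values (`lr`, `decay`, `eps`, `tau`) are assumptions chosen for illustration.

```python
import math

def rmsprop_step(params, grads, sq_avg, lr=1e-3, decay=0.9, eps=1e-8):
    """One RMSprop update over flat lists of floats.

    Keeps a running average of squared gradients and divides each
    gradient by its root, so every weight gets its own step size.
    Returns (new_params, new_sq_avg). Hyperparameters are illustrative.
    """
    new_params, new_sq_avg = [], []
    for p, g, v in zip(params, grads, sq_avg):
        v = decay * v + (1.0 - decay) * g * g          # running mean of g^2
        new_params.append(p - lr * g / (math.sqrt(v) + eps))
        new_sq_avg.append(v)
    return new_params, new_sq_avg

def soft_update(target, online, tau=0.005):
    """DDPG-style soft update: move target weights a fraction tau
    toward the online network's weights (Polyak averaging)."""
    return [(1.0 - tau) * t + tau * o for t, o in zip(target, online)]

# Toy usage: minimize f(x) = x^2 (gradient 2x) with RMSprop.
x, v = [1.0], [0.0]
for _ in range(500):
    x, v = rmsprop_step(x, [2.0 * x[0]], v, lr=0.01)
```

In a full DDPG agent, `rmsprop_step` would be applied to the actor and critic gradients, and `soft_update` would keep the target networks trailing slowly behind the online ones, which is what stabilizes learning of a continuous control policy.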

The proposed framework features a two-layered control architecture that models grid states, actions, and rewards to enable rapid response to disturbances. According to the authors, this design improves system stability, control accuracy, convergence speed, and resiliency scores. “The key to our success lies in the comprehensive reward design that reflects resiliency metrics and the resiliency adaptation layer that ensures swift reaction to threats,” Wang adds.
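The article does not list the state variables, reward terms, or adaptation rules the authors use, so the following is only a hypothetical sketch of how a resiliency-oriented reward and a second "adaptation" layer might fit together. Every field name, weight, and limit here (`GridState`, `resiliency_reward`, `adapt_action`, `safe_limit`, and so on) is an assumption made for illustration.

```python
from dataclasses import dataclass

@dataclass
class GridState:
    """Hypothetical observation; the paper's real state vector is not given."""
    freq_dev_hz: float      # frequency deviation from nominal
    volt_dev_pu: float      # voltage deviation, per unit
    unserved_load: float    # fraction of demand not served, 0..1
    threat_detected: bool   # flag from an assumed anomaly detector

def resiliency_reward(s, w_freq=1.0, w_volt=1.0, w_load=2.0):
    """Negative weighted sum of deviations: 0 is best, lower is worse.
    The weights are illustrative, not taken from the paper."""
    return -(w_freq * abs(s.freq_dev_hz)
             + w_volt * abs(s.volt_dev_pu)
             + w_load * s.unserved_load)

def adapt_action(policy_action, s, safe_limit=0.2):
    """Assumed second layer: clip the policy's setpoint change to a
    tighter range while a threat is flagged, else to the full [-1, 1]."""
    limit = safe_limit if s.threat_detected else 1.0
    return max(-limit, min(limit, policy_action))

nominal = GridState(0.0, 0.0, 0.0, False)
attacked = GridState(0.3, 0.05, 0.1, True)
```

Under these assumptions, the first layer (the learned policy) proposes a continuous action, the reward scores the resulting grid state, and the second layer constrains the action whenever a disturbance is flagged.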

The authors report that the framework outperforms classical PID controllers and other reinforcement learning methods such as Deep Q-Network (DQN) and Proximal Policy Optimization (PPO). Simulation results on a realistic smart grid testbed point to the framework’s potential for practical deployment as a scalable control solution.

The implications of this research are far-reaching. As smart grids become increasingly complex and interconnected, the need for adaptive and resilient control systems becomes paramount. This framework could pave the way for more secure and stable power distribution, ultimately benefiting both energy providers and consumers. “Our goal is to create a more resilient energy infrastructure that can withstand the challenges of the future,” Wang states.

Beyond addressing current cyber-physical threats, the research sets the stage for further work in the field. As the energy sector continues to evolve, deep reinforcement learning frameworks like this one could become a core part of modern power system management.