Researchers from the Institute of Automation, Chinese Academy of Sciences, led by Sicheng Shen and Mingyang Lv, have developed TEFormer, a framework designed to improve how Spiking Transformers, an energy-efficient neural network architecture, model temporal information.

Spiking Neural Networks (SNNs) have attracted attention because they communicate through sparse, binary spikes, which gives them the potential to process information more efficiently than conventional artificial neural networks. Spiking Transformers, which combine the Transformer architecture with spiking neurons, have shown promise in sequence modeling tasks, but they often struggle to fuse information effectively across time steps. To address this limitation, the researchers drew inspiration from the human visual pathway and created TEFormer, a framework that splits temporal modeling between its two core components, the attention module and the MLP.

TEFormer introduces a forward temporal fusion mechanism in the attention module, allowing for fully parallel computation. Additionally, it incorporates a backward gated recurrent structure in the Multilayer Perceptron (MLP) to aggregate temporal information in reverse order and reinforce temporal consistency. This bidirectional approach enables TEFormer to better exploit spatiotemporal dependencies.

The researchers conducted extensive experiments across a range of benchmark datasets, showing that TEFormer consistently outperforms other SNN and Spiking Transformer baselines. They also evaluated TEFormer under different neural encoding schemes and found that its performance gains remained stable, suggesting that the improved temporal modeling translates into consistent accuracy gains regardless of how inputs are represented as spikes.

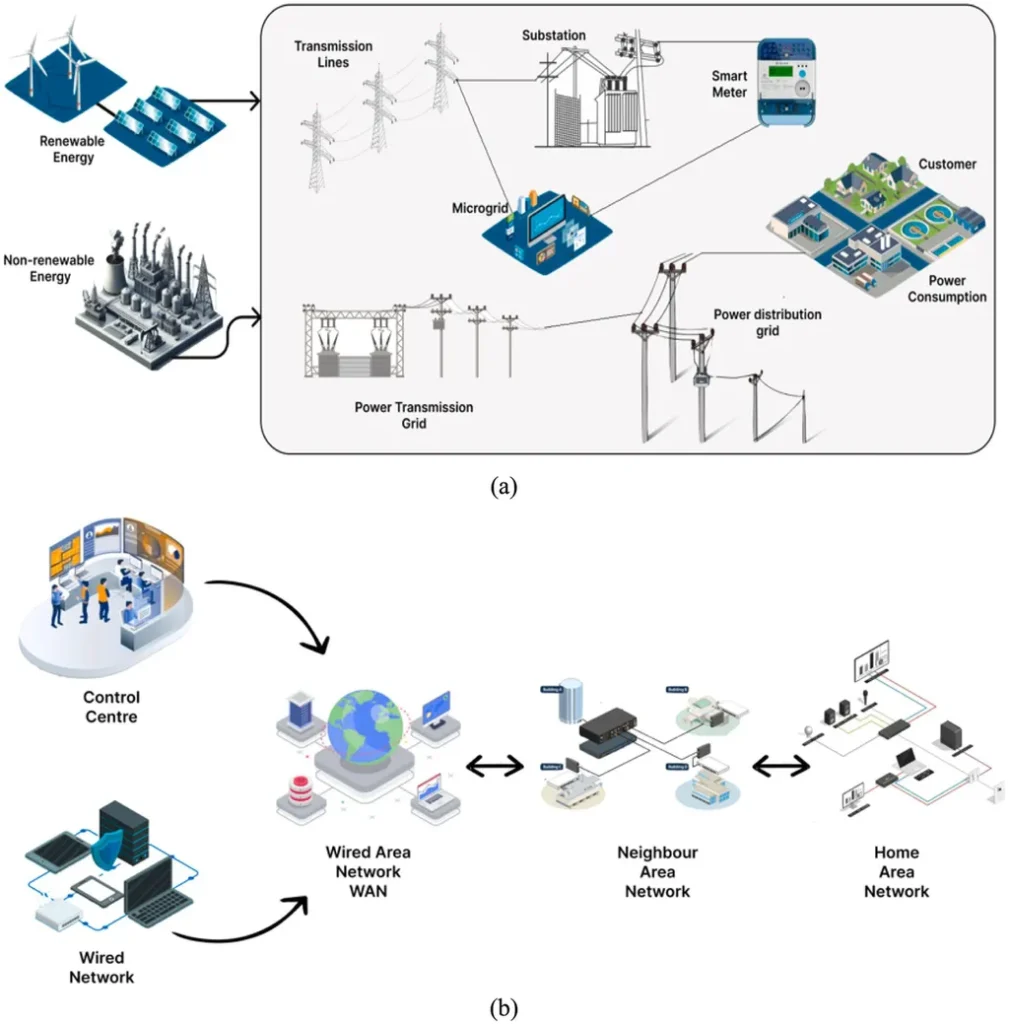

The research could also have practical applications in the energy sector. Energy-efficient neural networks like TEFormer could be deployed on edge devices and sensors for real-time monitoring and predictive maintenance of energy infrastructure. They could also support smart grid management systems that must process large volumes of time-series data efficiently. In addition, TEFormer's improved temporal modeling could help make energy demand forecasting and load balancing more accurate, contributing to more efficient and sustainable energy management.

This research was published in the journal Nature Machine Intelligence.

This article is based on research available at arXiv.