Researchers Varad Kulkarni, Vaibhav Jha, Nikhil Reddy, and Yogesh Simmhan, from the International Institute of Information Technology, Hyderabad, have developed an approach for optimizing cloud-based platforms to run complex AI workflows, published in the journal IEEE Transactions on Cloud Computing.

The team's work addresses the challenges of deploying autonomous AI agents in the cloud when those agents are powered by large language models (LLMs) and use Model Context Protocol (MCP) servers to reach external tools. Hosting such workflows on conventional virtual machines (VMs) is resource-intensive and lacks the flexibility they need. The researchers propose an architecture called FAME, which runs these workflows on Functions-as-a-Service (FaaS) platforms to gain modularity, autoscaling, and cost efficiency.

FAME decomposes complex agentic patterns into smaller, composable agents, such as a Planner, an Actor, and an Evaluator, each built with LangGraph and orchestrated as a FaaS workflow. This modularity makes the system easier to manage and avoids the timeouts that a monolithic workflow can hit, since FaaS platforms cap how long a single function invocation may run (15 minutes on AWS Lambda). To maintain context across the user requests in a conversation, FAME automatically persists agent memory in DynamoDB and injects it into subsequent requests. It also optimizes MCP server deployment by wrapping the servers in AWS Lambda functions, caches tool outputs in S3, and proposes function fusion strategies, which combine several functions into one, to further improve performance.
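The decomposition, memory injection, and tool-output caching described above can be illustrated with a minimal, hypothetical Python sketch. It does not use LangGraph, AWS Lambda, DynamoDB, or S3: plain functions stand in for the Planner, Actor, and Evaluator agents, and in-memory dictionaries stand in for the DynamoDB memory table and the S3 cache. All names (`planner`, `actor`, `evaluator`, `run_workflow`, `MEMORY_TABLE`, `TOOL_CACHE`, `summarize_tool`) are illustrative assumptions, not FAME's actual code.

```python
import hashlib
import json
from typing import Callable

# Hypothetical in-memory stand-ins for the managed services named in the article.
MEMORY_TABLE: dict[str, list[str]] = {}  # plays the role of the DynamoDB memory table
TOOL_CACHE: dict[str, str] = {}          # plays the role of the S3 tool-output cache
TOOL_CALLS = {"count": 0}                # counts tool executions that missed the cache


def summarize_tool(text: str) -> str:
    # Hypothetical placeholder for a tool exposed by an MCP server: keeps the first 20 characters.
    return text[:20]


def cached_tool_call(tool: Callable[[str], str], name: str, arg: str) -> str:
    # Key the cache on the tool name and argument so identical calls are reused.
    key = hashlib.sha256(json.dumps([name, arg]).encode()).hexdigest()
    if key not in TOOL_CACHE:
        TOOL_CALLS["count"] += 1
        TOOL_CACHE[key] = tool(arg)
    return TOOL_CACHE[key]


def planner(event: dict) -> dict:
    # Inject a copy of the persisted conversation history into the request.
    history = list(MEMORY_TABLE.get(event["session_id"], []))
    plan = [f"summarize:{event['query']}"]
    return {**event, "history": history, "plan": plan}


def actor(event: dict) -> dict:
    # Execute each planned step through the cached tool wrapper.
    results = []
    for step in event["plan"]:
        name, arg = step.split(":", 1)
        results.append(cached_tool_call(summarize_tool, name, arg))
    return {**event, "results": results}


def evaluator(event: dict) -> dict:
    # Accept the run only if every step produced a non-empty result.
    return {**event, "accepted": all(event["results"])}


def run_workflow(session_id: str, query: str) -> dict:
    # Chain the agents in order, as an orchestrator would invoke separate functions.
    event = {"session_id": session_id, "query": query}
    for handler in (planner, actor, evaluator):
        event = handler(event)
    # Persist this turn so later requests in the same session can have it injected.
    MEMORY_TABLE.setdefault(session_id, []).append(query)
    return event
```

Calling `run_workflow("s1", "Summarize the FAME paper")` twice illustrates both mechanisms: the second call receives the first query in its injected `history`, and `summarize_tool` runs only once because the second result is served from the cache. Chaining the handlers inside one process, as this sketch does, loosely resembles fusing functions; in a deployed FaaS workflow each agent would instead run as a separate function invocation.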

The researchers evaluated FAME on two applications: research paper summarization and log analytics. The results were promising, showing up to a 13× reduction in latency, 88% fewer input tokens, and 66% cost savings, along with higher workflow completion rates. These results suggest that serverless platforms can host complex, multi-agent AI workflows at scale.

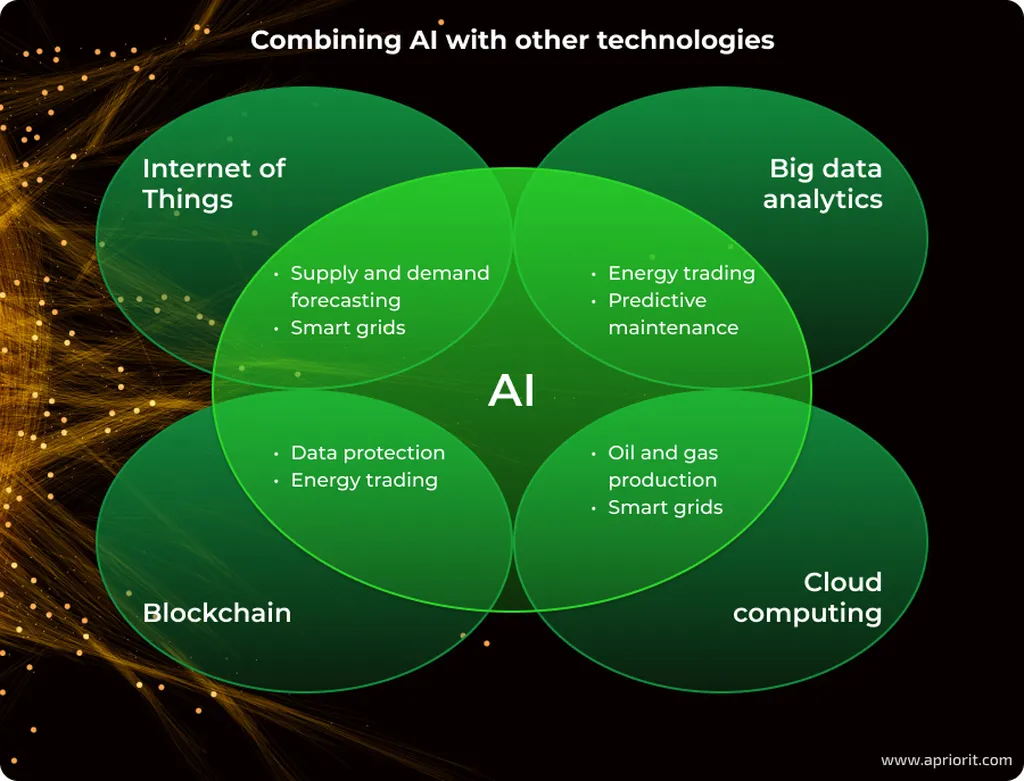

For the energy industry, this research could have significant implications. AI-driven workflows are increasingly used for tasks such as predictive maintenance, energy consumption forecasting, and grid management. By deploying these workflows more efficiently on cloud platforms, energy companies could lower costs and shorten processing times. The modular approach proposed by FAME could also make it easier to integrate new AI tools and models as they become available, helping energy companies keep pace with technological advances.

In conclusion, the FAME architecture offers a promising solution for the scalable deployment of complex AI workflows in the cloud. Its potential applications in the energy sector highlight the importance of continued research and development in this area.

This article is based on research available at arXiv.