Researchers Karim Abdelsalam, Zeyad Gamal, and Ayman El-Badawy from the American University in Cairo have developed a novel approach to controlling complex systems with nonlinear dynamics, a common challenge in various industries, including energy. Their work, published in the journal “IEEE Transactions on Control Systems Technology,” combines data-driven modeling with reinforcement learning to improve control efficiency and robustness.

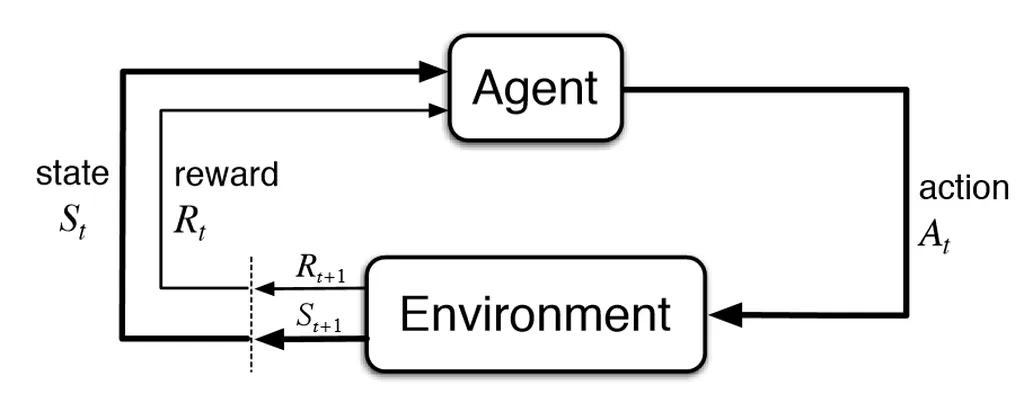

The team proposes a Dyna-style reinforcement learning framework, meaning the agent learns from both real experience and experience generated by a learned model. It integrates two key components: Sparse Identification of Nonlinear Dynamics (SINDy) and the Twin Delayed Deep Deterministic Policy Gradient (TD3) reinforcement learning algorithm. SINDy builds a data-driven model of the system from measurements, capturing its essential dynamics without requiring a detailed physical model. The identified model then generates synthetic data, which is used alongside real-world data to train the TD3 agent.
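To make the SINDy step more concrete, here is a minimal sketch of sequentially thresholded least squares, the sparse regression commonly used with SINDy. It is not the authors' implementation: the one-dimensional toy system, the polynomial library, and the names `build_library` and `stlsq` are all assumptions chosen for illustration.

```python
import numpy as np

def build_library(x):
    """Candidate functions 1, x, x^2, x^3 (a hypothetical, minimal library)."""
    return np.column_stack([np.ones_like(x), x, x**2, x**3])

def stlsq(theta, dxdt, threshold=0.1, n_iter=10):
    """Sequentially thresholded least squares.

    Fits dxdt ~ theta @ xi, zeroes coefficients whose magnitude is below
    `threshold`, and refits the surviving terms, repeating n_iter times.
    """
    xi = np.linalg.lstsq(theta, dxdt, rcond=None)[0]
    for _ in range(n_iter):
        small = np.abs(xi) < threshold
        xi[small] = 0.0
        for k in range(dxdt.shape[1]):
            keep = ~small[:, k]
            if keep.any():
                xi[keep, k] = np.linalg.lstsq(
                    theta[:, keep], dxdt[:, k], rcond=None
                )[0]
    return xi

# Toy data (assumed): dx/dt = -2 x + 0.5 x^3, with exact derivatives.
x = np.linspace(-1.0, 1.0, 50)
dxdt = (-2.0 * x + 0.5 * x**3).reshape(-1, 1)

theta = build_library(x)
xi = stlsq(theta, dxdt)
print(xi.ravel())  # coefficients for [1, x, x^2, x^3]
```

On real measurements the derivatives would have to be estimated from noisy data, and the threshold would need tuning; both are left out of this sketch.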

By using this hybrid approach, the researchers address a significant limitation of traditional model-free reinforcement learning methods: sample inefficiency. In other words, their method requires less real-world data to achieve effective control. This is particularly valuable in the energy sector, where systems often exhibit complex, nonlinear dynamics, and collecting data can be time-consuming and costly.
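The sample-efficiency argument can be illustrated with a small sketch of Dyna-style data mixing: a few real transitions are supplemented with many transitions produced by a model, and training batches draw from both. Everything here is an assumption for illustration, including the scalar model in `model_step`, the reward in `reward_fn`, the 25 percent real fraction, and the buffer sizes. The article does not describe how the authors mix data, and the TD3 update itself is omitted.

```python
import random
from collections import deque

def model_step(state, action, dt=0.01):
    """One Euler step of a hypothetical identified model dx/dt = -2x + u."""
    return state + dt * (-2.0 * state + action)

def reward_fn(state, action):
    """Hypothetical quadratic cost on state and control effort."""
    return -(state ** 2) - 0.01 * action ** 2

real_buffer = deque(maxlen=10_000)       # transitions from the real system
synthetic_buffer = deque(maxlen=10_000)  # transitions from the model

def add_synthetic(n, rng):
    """Generate n model transitions starting from states seen in real data."""
    starts = list(real_buffer)
    for _ in range(n):
        state = rng.choice(starts)[0]
        action = rng.uniform(-1.0, 1.0)
        next_state = model_step(state, action)
        synthetic_buffer.append(
            (state, action, reward_fn(state, action), next_state)
        )

def sample_batch(batch_size, real_fraction, rng):
    """Draw a training batch that mixes real and synthetic transitions."""
    n_real = min(int(batch_size * real_fraction), len(real_buffer))
    n_syn = min(batch_size - n_real, len(synthetic_buffer))
    return (rng.sample(list(real_buffer), n_real)
            + rng.sample(list(synthetic_buffer), n_syn))

rng = random.Random(0)

# Stand-in for 20 real interactions (here produced by the same toy dynamics).
for _ in range(20):
    s, a = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)
    real_buffer.append((s, a, reward_fn(s, a), model_step(s, a)))

add_synthetic(80, rng)                 # four synthetic transitions per real one
batch = sample_batch(32, 0.25, rng)    # this batch would feed the agent update
```

In this toy setup, 20 real interactions yield 100 training transitions in total. The benefit in practice depends on how accurate the identified model is, since errors in the model carry over into the synthetic data.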

The researchers demonstrated the effectiveness of their framework using a bi-rotor system as a case study. They evaluated its performance in stabilization and trajectory tracking, showing that their SINDy-TD3 approach achieved superior accuracy and robustness compared to direct reinforcement learning techniques. This suggests that combining data-driven modeling with reinforcement learning could enhance control strategies for complex systems in various industries, including energy.

The study itself tested only the bi-rotor system, but similar methods could in principle be applied elsewhere. Wind turbines, for instance, behave nonlinearly under varying wind conditions, and better control could raise energy output and extend equipment lifespan. Power grids are another candidate: improved grid control could enhance stability, reduce losses, and make it easier to integrate more renewable energy sources.

In conclusion, the Dyna-style SINDy-TD3 framework developed by Abdelsalam, Gamal, and El-Badawy presents a promising avenue for improving control strategies in complex systems. By combining data-driven modeling with reinforcement learning, energy companies could enhance the performance and robustness of their systems, ultimately contributing to a more efficient and sustainable energy sector.

This article is based on research available at arXiv.