Researchers from the University of Cambridge’s Centre for the Study of Existential Risk, including Chloé Touzet, Henry Papadatos, Malcolm Murray, and their colleagues, have published a study on how risk modeling can help manage the hazards posed by advanced artificial intelligence (AI) systems. The work, which appears in the journal “Risk Analysis,” argues that rapidly advancing AI technologies need a robust risk-management infrastructure to address the distinct challenges they present.

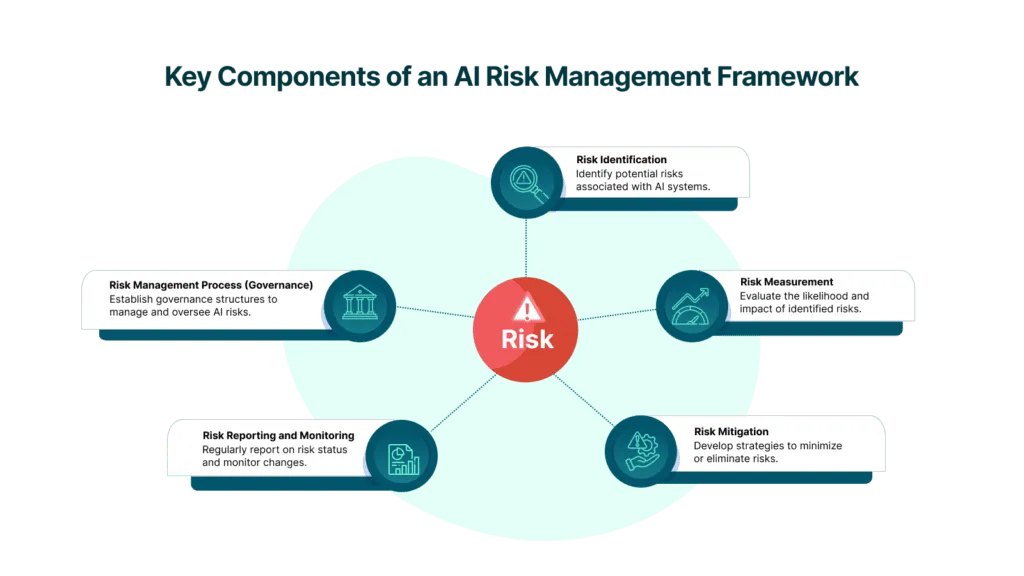

The researchers frame AI risk modeling as a two-part process: scenario building, which maps potential hazards and the pathways through which they lead to harm, and risk estimation, which quantifies the likelihood and severity of each identified risk pathway. They review classical risk assessment techniques, including fault tree and event tree analysis, Failure Modes and Effects Analysis (FMEA/FMECA), System-Theoretic Process Analysis (STPA), and Bayesian networks, and show how each can be adapted to advanced AI systems.
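To make the two parts concrete, here is a minimal sketch (not the authors' method) that chains two of the classical techniques. A fault tree with AND/OR gates estimates the probability of a hypothetical top event, assuming all basic events are independent, and an event tree then spreads that event over downstream outcomes with assumed severities to give an expected harm. Every event name, probability, and severity below is a hypothetical illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union


@dataclass
class Basic:
    """Leaf of the fault tree: a basic event with an assumed probability."""
    name: str
    p: float


@dataclass
class Gate:
    """Internal node combining its children with AND or OR logic."""
    kind: str  # "AND" or "OR"
    children: List["Node"]


Node = Union[Basic, Gate]


def prob(node: Node) -> float:
    """Top-event probability, assuming all basic events are independent."""
    if isinstance(node, Basic):
        return node.p
    child_ps = [prob(c) for c in node.children]
    if node.kind == "AND":
        # All children must occur: multiply their probabilities.
        result = 1.0
        for p in child_ps:
            result *= p
        return result
    if node.kind == "OR":
        # At least one child occurs: 1 minus P(none occur).
        none_occur = 1.0
        for p in child_ps:
            none_occur *= 1.0 - p
        return 1.0 - none_occur
    raise ValueError(f"unknown gate kind: {node.kind}")


def event_tree_expected_harm(p_initiating: float,
                             outcomes: List[Tuple[float, float]]) -> float:
    """Expected harm = P(initiating event) * sum(P(branch) * severity)."""
    total = sum(p for p, _ in outcomes)
    if abs(total - 1.0) > 1e-9:
        raise ValueError("branch probabilities must sum to 1")
    return p_initiating * sum(p * s for p, s in outcomes)


# Hypothetical misuse pathway: a malicious request AND a failed safeguard.
top = Gate("AND", [
    Basic("malicious request", 0.1),
    Gate("OR", [
        Basic("jailbreak succeeds", 0.2),
        Basic("filter misconfigured", 0.05),
    ]),
])

# Hypothetical consequences: (branch probability, severity in arbitrary units).
outcomes = [(0.7, 1.0),     # harm caught by downstream controls
            (0.3, 100.0)]   # harm reaches the real world

harm = event_tree_expected_harm(prob(top), outcomes)
print(f"P(top event) = {prob(top):.3f}, expected harm = {harm:.4f}")
```

The fault tree covers the scenario-building side (how a hazard arises), while the event tree and the probability-times-severity product cover risk estimation. The independence assumption is the weakest link; correlated failures are one reason the study also points to Bayesian networks.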

The study highlights fragmentation in current academic and industry efforts: capability benchmarks, safety cases, and partial quantitative studies are valuable, but they are insufficient unless they are integrated into comprehensive causal scenarios. The researchers draw parallels with risk management in other high-stakes sectors such as nuclear energy, aviation, cybersecurity, finance, and submarine operations, observing that these sectors combine deterministic guarantees against unacceptable events with probabilistic assessments of the broader risk landscape.
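The dual approach can be illustrated with a short sketch, offered as an assumption-laden illustration rather than a procedure from the paper. Scenarios marked unacceptable must be ruled out by a verified control, and a low probability estimate does not count as a substitute; all other scenarios are aggregated probabilistically into an expected harm. The `Scenario` fields, names, and numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Scenario:
    name: str
    probability: float        # assumed probability estimate for the period
    severity: float           # assumed harm in arbitrary units
    unacceptable: bool        # True if it must be excluded deterministically
    guaranteed_excluded: bool = False  # True if a verified control rules it out


def assess(scenarios: List[Scenario]) -> Tuple[List[str], float]:
    """Return (unacceptable scenarios lacking a guarantee, expected harm of the rest)."""
    # Deterministic part: any unacceptable scenario without a guarantee is a
    # violation, however small its estimated probability.
    violations = [s.name for s in scenarios
                  if s.unacceptable and not s.guaranteed_excluded]
    # Probabilistic part: expected harm over the remaining risk landscape.
    expected = sum(s.probability * s.severity
                   for s in scenarios if not s.unacceptable)
    return violations, expected


scenarios = [
    Scenario("loss of control", 1e-4, 1e6, unacceptable=True),
    Scenario("data leak", 0.02, 50.0, unacceptable=False),
    Scenario("biased output", 0.3, 2.0, unacceptable=False),
]
violations, expected = assess(scenarios)
print(violations, round(expected, 3))
```

Keeping the two checks separate means a rare but catastrophic scenario cannot be "averaged away" inside the expected-harm figure.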

The researchers argue that advanced-AI governance should adopt a similar dual approach, emphasizing the need for verifiable, provably safe AI architectures. They suggest that current AI models, which are often the product of opaque end-to-end optimization, require more transparent, explicitly specified design processes. In one proposed governance framework, developers conduct iterative risk modeling and regulators compare the results against predefined societal risk-tolerance thresholds.
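The iterative framework can be sketched as a simple loop, under loudly stated assumptions: the developer re-estimates risk after each hypothetical mitigation, each mitigation is assumed to cut residual risk by a fixed fraction, and the regulator accepts the system once the estimate is at or below a threshold. The function names, mitigation list, and numbers are illustrative only and do not come from the study.

```python
from typing import List, Tuple


def regulator_accepts(risk: float, threshold: float) -> bool:
    """Hypothetical regulator check against a societal risk-tolerance threshold."""
    return risk <= threshold


def iterate_until_tolerable(baseline_risk: float,
                            mitigations: List[Tuple[str, float]],
                            threshold: float) -> Tuple[bool, float, List[str]]:
    """Apply mitigations one at a time, re-estimating risk after each,
    until the regulator's threshold is met or mitigations run out."""
    risk = baseline_risk
    applied: List[str] = []
    for name, reduction in mitigations:
        if regulator_accepts(risk, threshold):
            break
        # Assumption: each mitigation removes a fixed fraction of residual risk.
        risk *= 1.0 - reduction
        applied.append(name)
    return regulator_accepts(risk, threshold), risk, applied


ok, final_risk, applied = iterate_until_tolerable(
    baseline_risk=0.01,
    mitigations=[("refusal training", 0.5),
                 ("output monitoring", 0.6),
                 ("access controls", 0.5)],
    threshold=0.0025,
)
print(ok, final_risk, applied)
```

In practice each "re-estimate" would mean rerunning the full scenario and estimation process rather than multiplying by a constant; the loop only shows where the regulator's comparison sits in the cycle.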

This study provides a methodological blueprint for integrating sound risk modeling into advanced-AI risk management. It opens a discussion on best practices for embedding rigorous risk assessment at the heart of AI development and governance, so that the energy industry and other sectors can harness AI’s benefits while mitigating its risks.

Source: Touzet, C., Papadatos, H., Murray, M., et al. (2023). The Role of Risk Modeling in Advanced AI Risk Management. Risk Analysis.

This article is based on research available at arXiv.