A team of researchers from the University of Science and Technology of China has developed a new approach to post-training Large Language Models (LLMs) for biomedical research. The team, led by Zhenchao Tang and including Fang Wang, Haohuai He, and others, proposes a method called Balanced Fine-Tuning (BFT) to better align LLMs with specialized biomedical knowledge. Their work, published in the journal Nature Machine Intelligence, addresses significant limitations in current approaches to post-training LLMs for biomedical applications.

Effective post-training is crucial for aligning LLMs with the intricate and often sparse textual data that describes complex biomedical mechanisms. Current methods, such as standard Supervised Fine-Tuning (SFT) and Reinforcement Learning (RL), have notable drawbacks. SFT tends to overfit to surface-level instruction patterns without fully internalizing the fragmented scientific knowledge, while RL is impractical because its reward signals would require experimental validation, which is often time-consuming and costly.

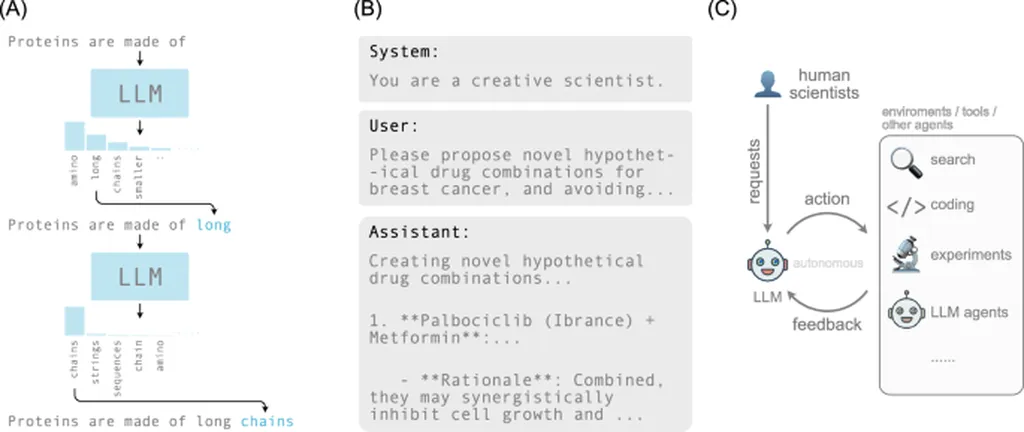

The researchers propose BFT as an efficient post-training method designed to learn complex reasoning from sparse data without external reward signals. BFT operates through a two-layer weighting mechanism. At the token level, it scales loss via prediction probabilities to stabilize gradients and prevent overfitting. At the sample level, it uses “minimum group confidence” to adaptively enhance the learning of hard samples.
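To make the two-layer weighting more concrete, the following is a minimal Python sketch of the idea, not the authors' implementation. The article does not give the exact formulas, so every detail here is an assumption: the token weight is taken to be the model's own probability for the target token (treated as a constant), "minimum group confidence" is taken to be the lowest mean probability over contiguous token groups, and the sample weight is taken to be one minus that value. The function names and the `group_size` parameter are hypothetical.

```python
import math


def token_weighted_losses(target_probs):
    """Token level (assumed form): scale each token's negative
    log-likelihood by the probability the model assigns to that token.
    Each probability must lie in (0, 1]."""
    return [-p * math.log(p) for p in target_probs]


def min_group_confidence(target_probs, group_size):
    """Sample level (assumed form): split the tokens into contiguous
    groups, average the probabilities in each group, and return the
    smallest group average."""
    groups = [
        target_probs[i:i + group_size]
        for i in range(0, len(target_probs), group_size)
    ]
    return min(sum(g) / len(g) for g in groups)


def bft_like_sample_loss(target_probs, group_size=2):
    """Combine both levels: the mean token-weighted loss, scaled up for
    samples whose weakest token group has low confidence."""
    losses = token_weighted_losses(target_probs)
    mean_loss = sum(losses) / len(losses)
    sample_weight = 1.0 - min_group_confidence(target_probs, group_size)
    return sample_weight * mean_loss


# Probabilities the model assigns to the correct tokens (toy values).
easy_sample = [0.9, 0.9, 0.9, 0.9]
hard_sample = [0.9, 0.9, 0.2, 0.3]

easy_loss = bft_like_sample_loss(easy_sample)
hard_loss = bft_like_sample_loss(hard_sample)
print(easy_loss < hard_loss)
```

Under these assumptions, the token weight bounds the per-token gradient: the derivative of `-log(p)` with respect to `p` grows as `1/p` for unlikely tokens, while the weighted term has a constant-magnitude derivative when the weight is held fixed. The sample weight then shifts emphasis toward samples that contain at least one poorly predicted span, which is one plausible reading of "adaptively enhance the learning of hard samples".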

Experiments conducted by the researchers demonstrate that BFT significantly outperforms SFT. In medical tasks, BFT enables LLMs to acquire knowledge that SFT misses. In biological tasks, BFT-based LLMs surpass GeneAgent, an accurate agent for biology analysis, in biological process reasoning. Additionally, the text embeddings generated by BFT can be directly applied to downstream tasks, such as gene interaction and single-cell perturbation response prediction.

For the energy sector, the implications of this research are indirect but noteworthy. Energy research increasingly intersects with biomedical applications, for example in the development of biofuels, biotechnology for carbon capture, and health and safety considerations in energy production. In these areas, LLMs improved through BFT could support more efficient and accurate data analysis. This could speed up advances in energy technologies that rely on complex biological processes, contributing to a more sustainable and innovative energy sector.

The research highlights the potential of BFT to support wide use of LLMs in biomedical research, with knock-on implications for fields like energy that benefit from advanced data analysis and reasoning capabilities.

This article is based on research available at arXiv.